Big export file split up, breaks integration

Hi All,

For a customer of ours, we created many integrations, and one of them has now caused a big issue downstream, because of the way a big JSON file was split up by Syndigo.

In this export:

- 2 entities, that have a parent - child relationship

- parents can have more than 1000 child objects

- An update to any of the child objects causes the parent to be included with all its valid child objects

- Exports are scheduled 2 times a day

- Syndigo JSON format

- 2000 records in the parent entity

- 65000 records in the child entity

- The exported JSON file is picked up by the customer's integration layer and converted to XML, in a format that replicates the format of the legacy PIM that we have replaced with Syndigo.

This all works well as long as the export file size stays within the Syndigo max file size limit.

When, because of a data cleanup project, 40,000+ objects were exported, the export got too big, and Syndigo split it into 9 JSON files. In this export, all parent objects ended up in the last JSON file created, which caused all child objects in the 8 other files to lose their relation with their parent object.
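To make the failure mode concrete, here is a minimal Python sketch of how a consumer could avoid it by reading all split files of one export run before resolving parents. It is not Syndigo functionality and not the customer's actual integration code. The record shape (a flat list of objects with `id`, `type` and `parentId`) and the function names are hypothetical stand-ins for the real Syndigo JSON structure.

```python
import json
from collections import defaultdict
from pathlib import Path


def load_records(export_dir, pattern="*.json"):
    """Read every split file of one export run into a single list.

    Assumes (hypothetically) that each file holds a JSON array of records.
    """
    records = []
    for path in sorted(Path(export_dir).glob(pattern)):
        with path.open(encoding="utf-8") as handle:
            records.extend(json.load(handle))
    return records


def group_children_by_parent(records):
    """Link children to parents across the combined records.

    Returns (parents, children_by_parent, orphans). Orphans are children
    whose parent is missing from the whole run, not just from one file.
    """
    parents = {r["id"]: r for r in records if r["type"] == "parent"}
    children_by_parent = defaultdict(list)
    orphans = []
    for record in records:
        if record["type"] != "child":
            continue
        if record["parentId"] in parents:
            children_by_parent[record["parentId"]].append(record)
        else:
            orphans.append(record)
    return parents, dict(children_by_parent), orphans
```

Because the parents are indexed only after all files are loaded, it no longer matters that they arrive in the last file. The trade-off is that the consumer has to know when a run is complete, and this does change downstream behaviour.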

What I'm looking for is:

- Can we create a split, in case of big exports, where we keep parent and child objects in the same file?

- Can we reduce the size of the JSON files by leaving out data that Syndigo puts in by default but that we don't need for our integrations?

For example, we currently get these details for an attribute in the JSON:

    "characteristic3value": {
      "values": [
        {
          "id": "4_0_0",
          "value": "18",
          "locale": "en-GB",
          "os": "businessRule",
          "osid": "computevzacpattributesfromenhancer_businessRule",
          "ostype": "businessRule",
          "source": "internal"
        }
      ]
    },

What we need for our integration is only this:

    "characteristic3value": {
      "values": [
        {
          "value": "18",
          "locale": "en-GB"
        }
      ]
    },

Reducing the size is no guarantee that this won't happen again, but it would make it possible to send a bigger set of objects in one file/export.
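For reference, the reduction asked for here can be sketched in Python as a filter over the attribute values. This is only an illustration of the target shape: it would run after the export, so it does not shrink the files Syndigo writes or prevent the split. The function names and `KEEP_KEYS` are hypothetical; the sample data is the attribute from the example in this post.

```python
import json

# Keys the integration needs; everything else is dropped.
# (Assumption: only "value" and "locale" matter, as in the example.)
KEEP_KEYS = ("value", "locale")


def slim_attribute(attribute, keep=KEEP_KEYS):
    """Return a copy of one attribute with only the wanted keys per value."""
    return {
        "values": [
            {key: value[key] for key in keep if key in value}
            for value in attribute.get("values", [])
        ]
    }


def slim_attributes(attributes, keep=KEEP_KEYS):
    """Apply slim_attribute to every attribute in a name -> attribute mapping."""
    return {name: slim_attribute(attr, keep) for name, attr in attributes.items()}


full = {
    "characteristic3value": {
        "values": [
            {
                "id": "4_0_0",
                "value": "18",
                "locale": "en-GB",
                "os": "businessRule",
                "osid": "computevzacpattributesfromenhancer_businessRule",
                "ostype": "businessRule",
                "source": "internal",
            }
        ]
    }
}

slim = slim_attributes(full)
print(json.dumps(slim, indent=2))
# Compare the serialized sizes of the full and the reduced attribute.
print(len(json.dumps(full)), "->", len(json.dumps(slim)), "characters")
```

The size comparison at the end gives a rough idea of how much of each attribute is metadata that the integration does not use.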

In parallel we're looking into other solutions, but these 2 options seem to be the ones that don't require changes to the downstream functionality.

Thank you, and happy with any suggestions,

Erik

-

Official comment

Hi Erik,

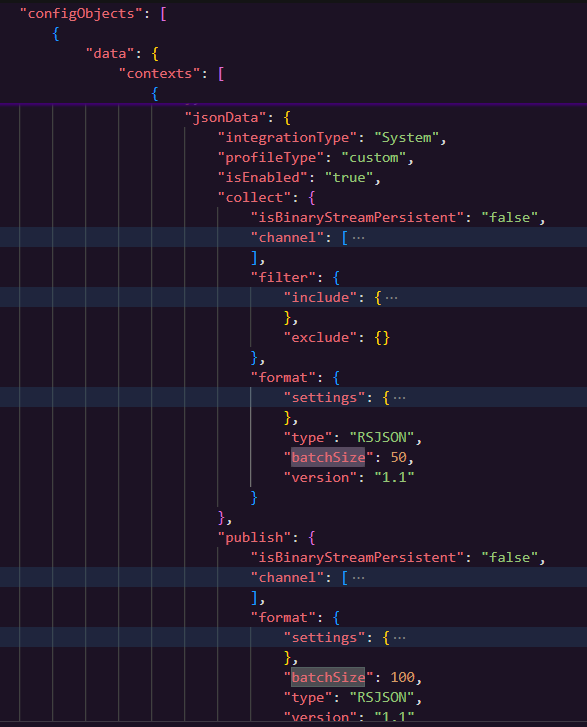

The entity count in a file can be controlled through batchSize. Since this is a scheduled export, the collect profile plays an important role here. In the collect profile, under publish, you can define batchSize; that many parent entities will then be included in one file, along with their related entities. If the export exceeds that entity count, it is split into multiple files, but the parent-child combinations are not lost.

Shortening the JSON data by eliminating the Syndigo-generated content sounds like a good idea, but we do not support this. Kindly raise a feature request for it.

Thanks!

-

Hi Erik

"Can we reduce the size of the JSON files, by leaving out data that Syndigo puts in by default but we don't need for our integrations."

Unfortunately, no. We requested this several years ago. The response we got back then was "if enough ask for it, it might be done". So, please create a feature request for it.

Regarding your main problem, it might be possible to greatly reduce the batch size of the export profile, so that the parent and child entities are hopefully kept together. I'm not sure that will work, but it might be worth a try.

-

Hi Eric,

Thanks for your response, we will create the feature request.

There is not much we can do about the size of the batch, because even an update to 200 child objects can produce a batch that is too big, if those child objects have different parents that each have big sets of child objects.
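A rough Python sketch of that arithmetic, using the rule from the original post that an update to any child causes its parent to be exported with all its child objects. The function name and the numbers are made up for illustration and are not taken from the customer's data.

```python
def estimate_export_size(children_per_parent, parent_of, updated_children):
    """Count the objects an export would contain.

    Every distinct parent of an updated child is exported once,
    together with all of its child objects.
    """
    affected_parents = {parent_of[child] for child in updated_children}
    return sum(1 + children_per_parent[parent] for parent in affected_parents)


# Illustrative data: 40 parents with 1000 children each,
# and one updated child under each parent.
children_per_parent = {f"P{i}": 1000 for i in range(40)}
parent_of = {f"P{i}-C0": f"P{i}" for i in range(40)}
updated_children = list(parent_of)

print(estimate_export_size(children_per_parent, parent_of, updated_children))
# 40 parents * (1 parent + 1000 children) = 40040 objects
```

In this made-up case, 40 updated child objects already pull more than 40,000 objects into the export, so the number of updated objects says little about the size of the resulting files.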

And if this goes wrong, it causes a major incident.

We'll have a look at redesigning the whole integration if we don't find a fix for this issue.

-

Hi Erik

Have you experimented with adjusting the batchSize values?

Below you can see that there are 2 batchSize properties. Try adjusting the publish batch size, for example to the value "2", to see whether that restricts the total count or the parent count.

-

Hi Eric,

Yes, we tried that, but we did not manage to have the files split up in such a way that the parent and child objects were always grouped in one file.
